Introduction to Automata Theory

The Origin of the Idea

Long before high-level programming languages existed, mathematicians tried to answer a deep question -

“Can we model human reasoning and mechanical computation with formal systems? “

This led to the birth of automata theory, a branch of theoretical computer science that models simple machines capable of understanding structured input.

Key figures in development of the field are -

- Alan Turing (1936): Created the Turing Machine, the simplest possible machine that can simulate any algorithm. This became the formal definition of “computability”.

- Alonzo Church: Worked on lambda calculus, another formal system showing how functions and logic could be expressed.

- Stephen Kleene: Introduced the concept of regular expressions and invented Kleene star (∗) and positive closure (+) which are now core to pattern matching.

- Noam Chomsky (1956): Developed the Chomsky hierarchy, a classification of formal grammars based on their complexity.

These ideas formed the mathematical foundation of compilers, interpreters, and all modern programming languages.

The Building Blocks of Automata Theory

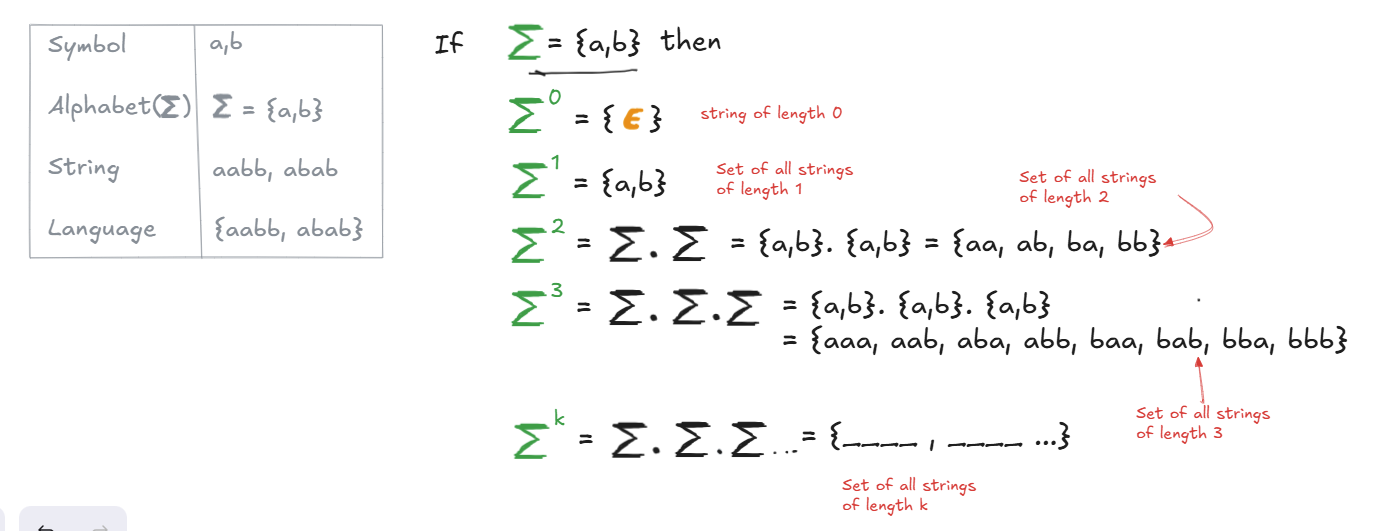

To model how machines understand patterns, lets first start with some basic concepts:

- Symbol: Basic building block which can be character or token. e.g- a,b,1,2 etc.

- Alphabet (Σ): A finite set of symbols. e.g- {a, b} or {0, 1}.

- String: A finite sequence of symbols from an alphabet. e.g- abb, 1010

- Language (L): A set of valid strings over an alphabet. e.g- All strings with an even number of 1s.

Operations

- Concatenation: ab means symbol a followed by b

- Union: a ∪ b means either a or b

- Kleene Closure (*): a* means zero or more a’s (ε, a, aa, aaa, …)

- Positive Closure (+): a+ means one or more a’s (a, aa, aaa, …)

These operations are what make regular expressions possible and regular expressions define the simplest class of languages that computers can recognize.

Why Does This Matter for Developers?

Every compiler’s or interpreter’s initial 2 steps are -

- Lexical analysis - Breaks your code into tokens (e.g.,

while,if,123,=) - Syntax analysis - Checks if those tokens follow the grammar

These first two stages are automata in action. Lexical analyzers use finite automata and regular expressions while Syntax analyzers use context-free grammars. So, before we can write or understand a compiler, we need to know how machines process structured input which is exactly what automata theory teaches us